Tracking Drone (2017)

[Background]

● Second Prize winner in the National Electronic Design Competition

● Led the computer vision hardware and algorithm development

[Function]

● The drone can autonomously take off, hover, and land. It can also automatically track and follow a remote-control car, and can learn to follow targets of different colors

[Realization]

● Flight Controller Module: APM (Ardupilot Mega)

● Camera Module: OV7725

● Control Algorithm: Dual-loop PID

● Computer Vision: traditional CV algorithms based on morphological operations, with color-space conversion
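A dual-loop (cascade) PID nests two controllers: the outer loop turns a position error into a velocity setpoint, and the inner loop turns the velocity error into a control command. The sketch below assumes the outer loop acts on the target's position error (for example, its pixel offset from the image centre) and the inner loop on the drone's velocity; the class names, gains, and clamping limits are hypothetical, not the values flown on the APM.

```python
from dataclasses import dataclass


@dataclass
class PID:
    """Positional PID with output clamping (hypothetical gains and limits)."""
    kp: float
    ki: float
    kd: float
    out_limit: float
    integral: float = 0.0
    prev_error: float = 0.0

    def update(self, setpoint: float, measurement: float, dt: float) -> float:
        error = setpoint - measurement
        self.integral += error * dt
        derivative = (error - self.prev_error) / dt
        self.prev_error = error
        out = self.kp * error + self.ki * self.integral + self.kd * derivative
        # Clamp so this loop never demands more than the next stage can deliver.
        return max(-self.out_limit, min(self.out_limit, out))


class CascadePID:
    """Outer loop: position error -> velocity setpoint.
    Inner loop: velocity error -> control command (e.g. a tilt/attitude request)."""

    def __init__(self, outer: PID, inner: PID):
        self.outer = outer
        self.inner = inner

    def update(self, target_pos: float, pos: float, vel: float, dt: float) -> float:
        vel_setpoint = self.outer.update(target_pos, pos, dt)
        return self.inner.update(vel_setpoint, vel, dt)
```

With an outer gain of 1.0 limited to 2.0 and an inner gain of 0.5 limited to 1.0, a 10-unit position error becomes a velocity setpoint of 2.0 and a command of 1.0. Clamping the outer loop keeps it from requesting velocities the inner loop cannot reach.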

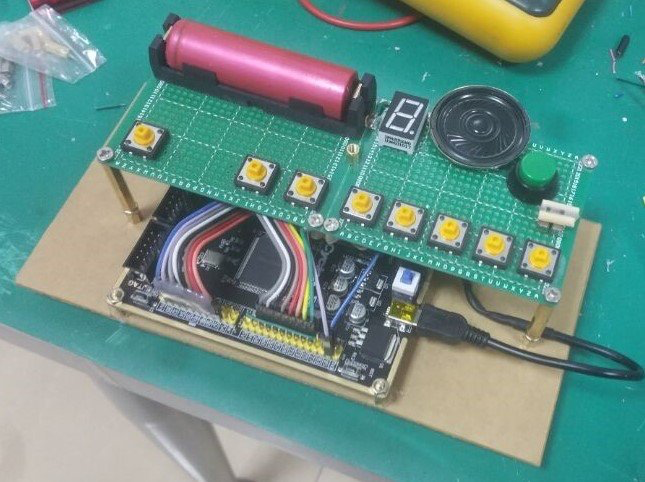

FPGA Electronic Piano (2018)

[Background]

● Course design project for learning FPGA development

[Function]

● Plays a specific tone when the corresponding button is pressed, and can also play a preset song

[Realization]

● Implemented in Verilog HDL on an Altera Cyclone IV FPGA (EP4CE)

● Different tones are generated by PWM waves of different frequencies, while the preset song is played by a finite state machine (FSM)
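The tone logic can be modelled in a few lines, assuming each tone is a 50% duty square wave produced by dividing a 50 MHz system clock (a common Cyclone IV board oscillator, but an assumption here) and equal-tempered pitches with A4 = 440 Hz. This Python model only computes the divider constants and steps through a preset-song FSM; on the FPGA the same constants would feed a Verilog counter. The three-note `SONG` is hypothetical.

```python
CLK_HZ = 50_000_000  # assumed board clock


def note_freq(midi_note: int) -> float:
    """Equal-tempered frequency with A4 (MIDI note 69) = 440 Hz."""
    return 440.0 * 2 ** ((midi_note - 69) / 12)


def half_period_count(freq_hz: float, clk_hz: int = CLK_HZ) -> int:
    """Clock cycles between output toggles for a 50% duty square wave."""
    return round(clk_hz / (2 * freq_hz))


# Hypothetical preset song: (MIDI note, beats) for C4, D4, E4.
SONG = [(60, 1), (62, 1), (64, 2)]


def play(song, beat_ticks):
    """Yield the divider value for every tick while walking the song FSM.

    The state is the index into `song`; when a state's duration expires
    the FSM advances to the next state. Assumes a non-empty song.
    """
    state, ticks_left = 0, song[0][1] * beat_ticks
    while state < len(song):
        yield half_period_count(note_freq(song[state][0]))
        ticks_left -= 1
        if ticks_left == 0:  # duration expired: transition to next state
            state += 1
            if state < len(song):
                ticks_left = song[state][1] * beat_ticks
```

For A4, the counter toggles the output every 56818 cycles of the assumed 50 MHz clock.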

Gesture Recognizer (2018)

[Background]

● Second Prize winner in the Provincial Electronic Design Competition

[Function]

● Recognizes the gestures of the finger-guessing game (rock-paper-scissors)

● Can learn to recognize additional special gestures

[Realization]

● Two 3-channel capacitance sensors measure capacitance at multiple locations; K-means clustering on these readings provides both the recognition and the learning functions

● A resistive touch-screen human-machine interface provides real-time display
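A minimal sketch of the clustering step, assuming each sample is a 6-dimensional vector (three channels from each of the two sensors) and that learning a new gesture amounts to adding a labelled cluster centroid. The deterministic farthest-point seeding and all names are illustrative choices, not the competition firmware.

```python
import math

Vector = tuple[float, ...]


def classify(sample: Vector, centroids: list[Vector]) -> int:
    """Index of the centroid nearest to `sample` (Euclidean distance)."""
    return min(range(len(centroids)), key=lambda c: math.dist(sample, centroids[c]))


def kmeans(points: list[Vector], k: int, iters: int = 50):
    """Plain K-means with deterministic farthest-point seeding."""
    # Seed: first sample, then repeatedly the sample farthest from all chosen centroids.
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))

    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: attach every sample to its nearest centroid.
        labels = [classify(p, centroids) for p in points]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                new_centroids.append(tuple(sum(col) / len(members) for col in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster in place
        if new_centroids == centroids:
            break  # converged
        centroids = new_centroids
    return centroids, labels
```

After clustering, each centroid would be tagged with a gesture name, and `classify` maps a new reading to the nearest one.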

Smart Incubator (Device+Server+APP) (2019)

[Background]

● Graduation project (awarded the School’s Excellent Graduation Design, given to 2% of graduation projects)

[Function]

● The design provides:

① visual tracking of the box's GPS position

② real-time temperature curve display

③ display of the medicine status

④ monitoring of the lid status

● The system's hardware and software consist of four loosely coupled subsystems:

① the incubator system

② the acquisition and communication system

③ the server and host computer system

④ the hardware power supply system

[Realization]

● The incubator system is built on an STM32 microcontroller and uses an incremental PID algorithm to store medicines at an adjustable constant temperature

● The server is deployed on Windows Server 2012, and the host computer runs Android; the related programs are written in Java

● The acquisition and communication system uses multiple sensor modules to collect environmental and status data from the box and uploads the data to the server through a GPRS communication module. Both acquisition and transmission delays are within 1 second

● The hardware power supply system combines 18650 lithium batteries with a switched-mode power supply
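The incremental (velocity-form) PID computes only the change in output from the last three errors: Δu_k = Kp(e_k - e_{k-1}) + Ki·e_k + Kd(e_k - 2e_{k-1} + e_{k-2}). A minimal sketch, assuming the output is a heater PWM duty cycle in percent and that Ki and Kd already include the sampling period; the gains and limits are hypothetical.

```python
class IncrementalPID:
    """Velocity-form PID: each update adds Δu_k to the previous output."""

    def __init__(self, kp: float, ki: float, kd: float,
                 out_min: float = 0.0, out_max: float = 100.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max  # assumed duty-cycle range (%)
        self.e1 = 0.0      # e[k-1]
        self.e2 = 0.0      # e[k-2]
        self.output = 0.0  # accumulated output u[k]

    def update(self, setpoint: float, measurement: float) -> float:
        e = setpoint - measurement
        delta = (self.kp * (e - self.e1)
                 + self.ki * e
                 + self.kd * (e - 2 * self.e1 + self.e2))
        self.e2, self.e1 = self.e1, e
        # Clamp the accumulated output to the actuator range.
        self.output = min(self.out_max, max(self.out_min, self.output + delta))
        return self.output
```

Because no separate integral term is stored, clamping the accumulated output also limits integral wind-up, which is one reason the incremental form suits heater control on a small MCU.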

Pipe Counting Software (2020)

[Background]

● Successfully deployed in a factory

● Open-sourced on Gitee

[Function]

● Software that assists with counting steel pipes in factories, relieving workers of heavy visual fatigue

● Input images can be loaded from local files, captured from a standalone camera, or taken from screenshots, and the final annotated image can be saved

● Because machine vision can make errors in complex scenes, the final count can be corrected manually

[Realization]

● Computer Vision: Hough Transform

● Coding: Qt (Cross-platform), OpenCV
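A minimal fixed-radius circle Hough transform, assuming pipe ends appear as circles of roughly known radius and that edge pixels (for example from a Canny detector) are already available. OpenCV's `cv2.HoughCircles` does this in practice with a gradient-based variant; this pure-Python version only shows the voting idea, and its parameters are hypothetical.

```python
import math
from collections import Counter


def hough_circles(edge_points, radius, threshold, angle_steps=90, min_sep=None):
    """Return centres of circles with the given radius.

    Each edge pixel votes for every centre lying `radius` away from it;
    accumulator peaks with at least `threshold` votes are kept, and
    centres closer than `min_sep` to a stronger one are suppressed.
    """
    if min_sep is None:
        min_sep = radius
    # Distinct integer offsets on a circle of the given radius.
    offsets = {
        (round(radius * math.cos(2 * math.pi * i / angle_steps)),
         round(radius * math.sin(2 * math.pi * i / angle_steps)))
        for i in range(angle_steps)
    }
    acc = Counter()
    for x, y in edge_points:
        for dx, dy in offsets:
            acc[(x - dx, y - dy)] += 1
    centres = []
    for (cx, cy), votes in acc.most_common():  # strongest peaks first
        if votes < threshold:
            break
        if all(math.dist((cx, cy), c) >= min_sep for c in centres):
            centres.append((cx, cy))
    return centres
```

The number of returned centres is the pipe count; the manual-correction feature then covers pipes that fall below the vote threshold.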

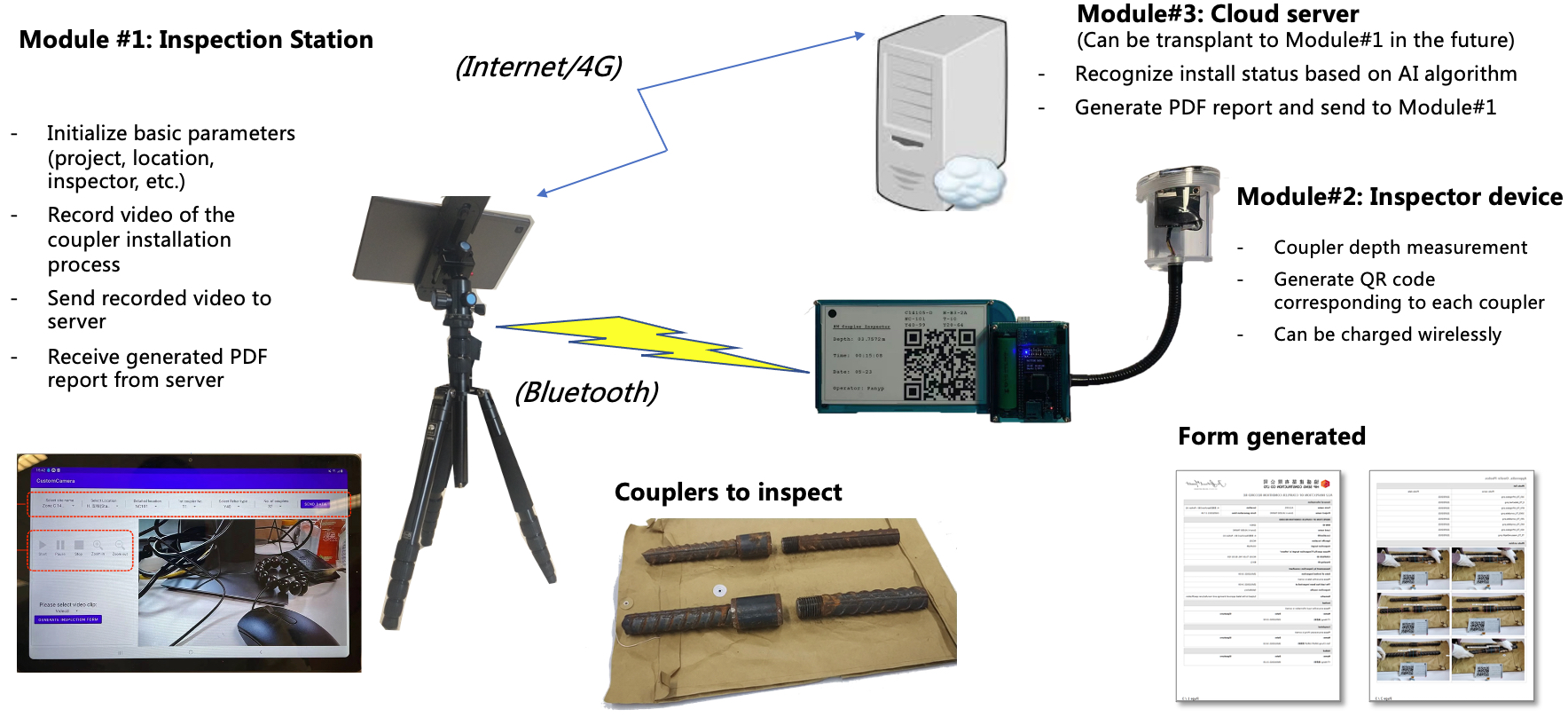

Coupler Inspector (2021)

[Background]

● Adopted by the New World Development Company

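● Press: “Two towers at The Pavilia Farm III (柏傲莊III) must be demolished and rebuilt, reportedly over the mistaken use of lower-strength concrete”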

[Function]

● An Android app sends measurement commands via Bluetooth

● An e-paper display shows the measured depth along with the QR code of the current coupler

● The built-in battery supports wireless charging

● Inspection forms are generated automatically on the server

i-Core for MiC (2021)

[Background]

● Funded by ITF 2020 (ITP/029/20LP)

● Homepage: e-inspection

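● Won the ICT Award (Press: “HKU Smart City Construction Laboratory’s remote e-inspection system for cross-border Modular Integrated Construction (MiC) logistics wins the Gold Award for Smart Logistics at the 2022 Hong Kong ICT Awards”)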

[Function]

● Data from IMU, GPS, UWB, and other sensors are uploaded to a universal platform that integrates BIM, blockchain, and other technologies, to better manage the flow of building modules and the supply chain

● Results are displayed in mobile apps and on websites
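To illustrate why a blockchain suits tamper-evident logistics records, the sketch below chains sensor records with SHA-256 so that editing any earlier record breaks verification. It is only a hash chain (no consensus or distribution), not the platform's implementation, and the record fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def record_hash(record: dict, prev_hash: str) -> str:
    """SHA-256 over the canonical JSON of the record plus the previous hash."""
    payload = json.dumps(record, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_record(chain: list, record: dict) -> None:
    """Link a new record to the hash of the last one."""
    prev = chain[-1]["hash"] if chain else GENESIS
    chain.append({"record": record, "prev": prev, "hash": record_hash(record, prev)})


def verify(chain: list) -> bool:
    """Recompute every link; any edited record or broken link fails."""
    prev = GENESIS
    for block in chain:
        if block["prev"] != prev or block["hash"] != record_hash(block["record"], prev):
            return False
        prev = block["hash"]
    return True
```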

MetaCam – 3D Scanning Equipment (2022)

[Background]

● Prototypes for a start-up project of Skyland Innovation

● Responsible for the hardware design and for the time-synchronization design, realized through hardware-software co-design

[Function]

● Portable, handheld devices for capturing colored point cloud maps

● A synchronization board time-aligns the peripheral sensors, including LiDAR, IMU, cameras, and motor encoders, to improve the performance of LiDAR SLAM
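One common way to exploit hardware trigger pulses is to timestamp the same pulses in both the MCU reference clock and each sensor's local clock, then fit a linear mapping (drift and offset) by least squares. The sketch below assumes that setup; whether the MetaCam board uses exactly this scheme is not stated here, and the function names are hypothetical.

```python
def fit_clock(local_ts, ref_ts):
    """Least-squares fit of ref ≈ a * local + b from paired pulse timestamps.

    `a` captures clock drift (rate error) and `b` the offset between clocks.
    """
    n = len(local_ts)
    mean_l = sum(local_ts) / n
    mean_r = sum(ref_ts) / n
    sxx = sum((t - mean_l) ** 2 for t in local_ts)
    sxy = sum((t - mean_l) * (r - mean_r) for t, r in zip(local_ts, ref_ts))
    a = sxy / sxx
    b = mean_r - a * mean_l
    return a, b


def to_reference(t_local, a, b):
    """Convert a sensor-local timestamp into the reference clock."""
    return a * t_local + b
```

Fitting over several pulses averages out timestamp jitter, and refitting periodically tracks slow clock drift.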

AR Human-Robot Collaboration (2022)

[Background]

● A framework that combines an AR head-mounted display (HMD, HoloLens) with a robot arm (UR5) to improve the efficiency of manual waste sorting

[Function]

● A depth camera detects the contours of waste objects on the table or conveyor belt, and the operator selects objects for classification through the AR HMD

● If the computer vision sorting algorithm misclassifies an object, the operator can correct it through the AR HMD, demonstrating the system's human-robot collaboration and interaction features
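A minimal sketch of the object-detection step, assuming a top-down depth image in millimetres and a known table depth: pixels noticeably closer than the table are treated as objects and grouped with a 4-connected flood fill. It returns bounding boxes rather than true contours (OpenCV's `cv2.findContours` would be the usual tool for outlines), and the thresholds are hypothetical.

```python
from collections import deque


def segment_objects(depth, table_depth, min_height=10, min_pixels=3):
    """Bounding boxes (row0, col0, row1, col1) of blobs standing above the table.

    A depth of 0 is treated as an invalid reading and ignored.
    """
    rows, cols = len(depth), len(depth[0])
    mask = [[0 < depth[r][c] < table_depth - min_height for c in range(cols)]
            for r in range(rows)]
    seen = [[False] * cols for _ in range(rows)]
    boxes = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill over 4-connected object pixels.
            queue = deque([(r, c)])
            seen[r][c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(pixels) >= min_pixels:  # drop speckle noise
                ys = [p[0] for p in pixels]
                xs = [p[1] for p in pixels]
                boxes.append((min(ys), min(xs), max(ys), max(xs)))
    return boxes
```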

RoboAvatar_Urdf2Ifc (2023)

[Background]

● Complete implementation of the paper Building “RoboAvatar”: Industry Foundation Classes–Based Digital Representation of Robots in the Built Environment

● Bridges the gap between the general robot descriptions used in robotics and those used in the construction industry, which we believe benefits the construction robotics community

● Open-sourced on GitHub

[Function]

● Fully automated converter from URDF (Unified Robot Description Format) to IFC (Industry Foundation Classes)

● Well-designed GUI with viewers
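A URDF-to-IFC converter has to read the URDF kinematic tree first. The sketch below, assuming a URDF whose `<joint>` elements use the standard `<parent link=...>`, `<child link=...>`, and optional `<origin xyz=...>` tags, extracts links and joints and orders the links parent-first, the order in which each link's geometry could be placed relative to its parent. The IFC-writing side is omitted, and the function names are hypothetical, not the repository's API.

```python
import xml.etree.ElementTree as ET


def parse_urdf(urdf_xml: str):
    """Return (link names, joint dicts) from a URDF string."""
    root = ET.fromstring(urdf_xml)
    links = [link.get("name") for link in root.findall("link")]
    joints = []
    for joint in root.findall("joint"):
        origin = joint.find("origin")
        xyz_text = origin.get("xyz", "0 0 0") if origin is not None else "0 0 0"
        joints.append({
            "name": joint.get("name"),
            "type": joint.get("type"),
            "parent": joint.find("parent").get("link"),
            "child": joint.find("child").get("link"),
            "xyz": tuple(float(v) for v in xyz_text.split()),
        })
    return links, joints


def kinematic_order(links, joints):
    """Depth-first, parent-before-child ordering starting from root links."""
    children = {j["child"] for j in joints}
    by_parent = {}
    for j in joints:
        by_parent.setdefault(j["parent"], []).append(j["child"])
    stack = [link for link in reversed(links) if link not in children]
    order = []
    while stack:
        link = stack.pop()
        order.append(link)
        stack.extend(reversed(by_parent.get(link, [])))
    return order
```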

Scanner for BIM-PointCloud Registration (2024)

[Background]

● Used as the data collector for Global BIM-point cloud registration and association for construction progress monitoring

● A real-time BIM-point cloud registration system will be implemented on it

[Function]

● Multi-sensor fusion of LiDAR, camera, and BIM, with precise time synchronization triggered by an MCU

● Lightweight sensor synchronization solution
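With an MCU firing the triggers, each LiDAR scan or camera frame can be associated with the trigger that caused it by nearest-timestamp matching. A minimal sketch, assuming trigger times and sensor stamps are already on a common clock and using a hypothetical tolerance; stamps with no trigger close enough are rejected rather than forced into a pair.

```python
import bisect


def match_to_triggers(triggers, stamps, tolerance):
    """Map each sensor timestamp to the index of its nearest trigger.

    `triggers` must be sorted ascending. Returns None for stamps that are
    farther than `tolerance` from every trigger.
    """
    result = []
    for t in stamps:
        i = bisect.bisect_left(triggers, t)
        # The nearest trigger is either just before or just after t.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(triggers)]
        best = min(candidates, key=lambda j: abs(triggers[j] - t), default=None)
        if best is not None and abs(triggers[best] - t) <= tolerance:
            result.append(best)
        else:
            result.append(None)
    return result
```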

Realtime WiFi CSI-Sensing Suite (2024)

[Background]

● SLAM is an essential component of many robotics tasks, including autonomous navigation, environment reconstruction, and motion planning. Meanwhile, WiFi, a wireless signal that is almost ubiquitous indoors, offers cost-free and privacy-preserving sensing, which has attracted the attention of many researchers

● However, applying WiFi sensing to SLAM still faces a significant gap. Existing RSSI-based methods are too imprecise to improve classical SLAM frameworks. Existing CSI-based methods work only with a carefully designed AP layout, in which the locations of multiple APs must be provided and the APs may even need to be in line of sight (LOS). Such requirements are at odds with everyday WiFi usage

[Function]

● Easy Setup: plug-and-play on ROS without pre-calibration

● Lenient Signal Requirements: works with coverage from a single AP whose location is unknown, in both Line-Of-Sight (LOS) and Non-Line-Of-Sight (NLOS) scenarios

● Independence: after the WiFi sensor is added, the original system still functions in WiFi-denied environments

[Contribution]

● Hardware: a WiFi sensor ready for sensor fusion on robotic systems

● Fusion System: A robust and versatile tightly-coupled WiFi-Visual-Inertial Odometry system

● Dataset
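A common CSI preprocessing step in the literature is phase sanitization: raw per-subcarrier phase carries a linear trend across subcarriers (from timing offsets) plus a constant offset (from frequency and phase offsets) between transmitter and receiver, which can be removed by unwrapping and subtracting a least-squares line. The sketch below shows that generic step plus amplitude extraction; it is an illustration under that assumption, not necessarily this suite's pipeline, and the subcarrier indexing is hypothetical.

```python
import cmath
import math


def unwrap(phases):
    """Remove 2π jumps so consecutive phases differ by at most π."""
    out = [phases[0]]
    for p in phases[1:]:
        d = p - out[-1]
        d -= 2 * math.pi * round(d / (2 * math.pi))
        out.append(out[-1] + d)
    return out


def sanitize_phase(csi, subcarrier_idx):
    """Subtract the least-squares line (slope and offset) across subcarriers."""
    phase = unwrap([cmath.phase(h) for h in csi])
    n = len(phase)
    mk = sum(subcarrier_idx) / n
    mp = sum(phase) / n
    slope = (sum((k - mk) * (p - mp) for k, p in zip(subcarrier_idx, phase))
             / sum((k - mk) ** 2 for k in subcarrier_idx))
    offset = mp - slope * mk
    return [p - (slope * k + offset) for k, p in zip(subcarrier_idx, phase)]


def amplitude(csi):
    """Per-subcarrier CSI magnitude."""
    return [abs(h) for h in csi]
```

A purely linear phase, as produced by offsets alone, sanitizes to zero; whatever remains reflects the propagation environment.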

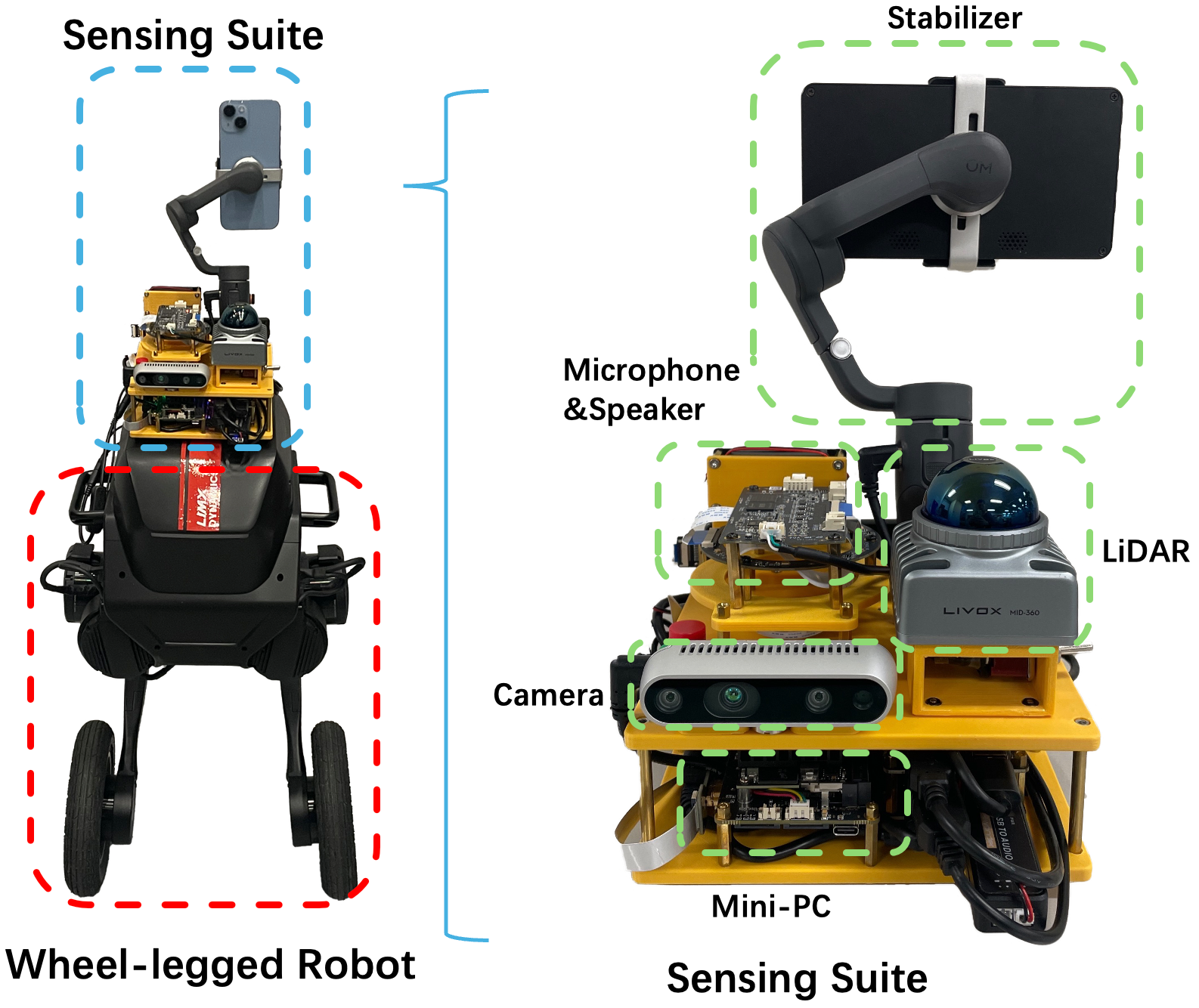

LimX Dynamics Navigation Suite (2024 Ongoing)

[Background]

● A collaborative project with the robotics company LimX Dynamics

● A navigation suite deployed on the TRON1 wheel-legged robot

Stay Tuned For More Updates…